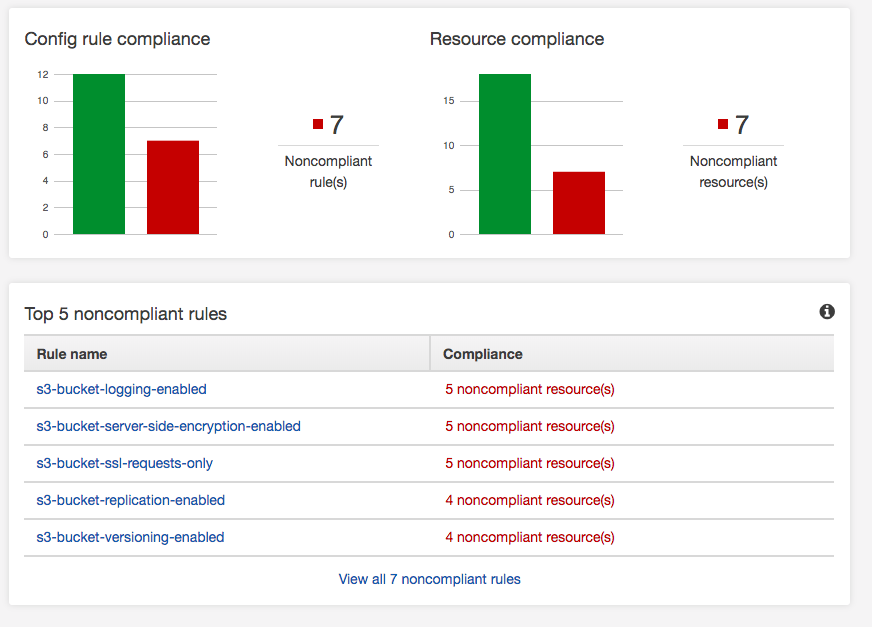

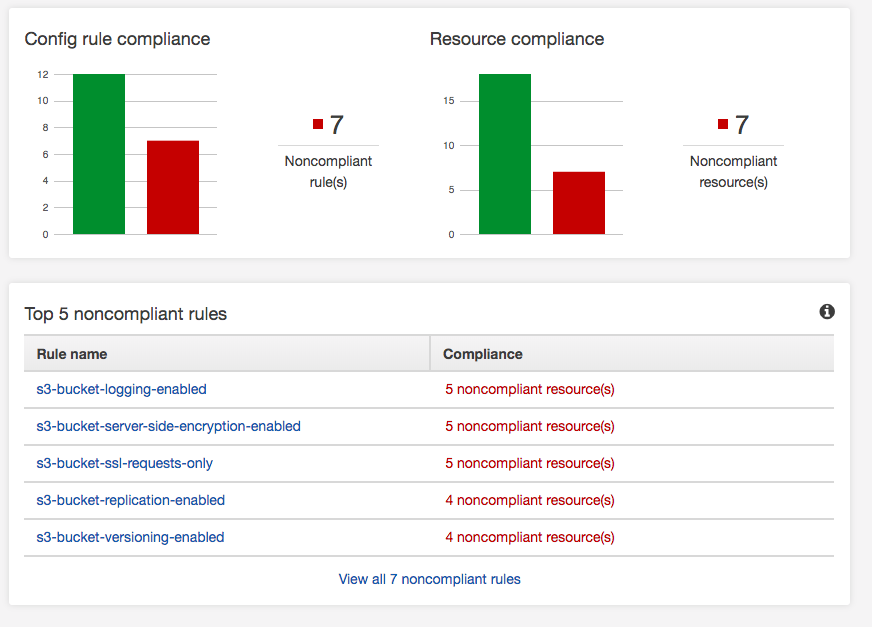

If you have an AWS deployment, make sure you turn on AWS Config. It has a whole bunch of built-in rules, and you can add your own to validate the security of your AWS environment as it relates to AWS services. Amazon provides good documentation and a GitHub repo, and SumoLogic has a quick how-to on turning it on. It’s straightforward to turn on and use. AWS provides some pre-configured rules, and those are what this AWS environment is validated against. There is a screenshot of the results. Aside from turning it on, you have to decide which rules are valid for you. For instance, not all S3 buckets have a business requirement to replicate, so I’d expect those to always show up as noncompliant resources.

However, one of my findings yesterday was EBS volumes without encryption. Encrypting existing EBS volumes takes 9 easy steps:

- Make a snapshot of the EBS volumes.
- Copy the snapshot to a new snapshot, selecting encryption. Use the AWS KMS key you prefer or the Amazon default key, aws/ebs.
- Create an AMI from the encrypted snapshot.
- Launch the AMI to create a new instance.
- Check that the new instance is functioning correctly and that there are no issues.
- Update EIPs, load balancers, DNS, etc. to point to the new instance.
- Stop the old unencrypted instances.
- Delete the unencrypted snapshots.
- Terminate the old unencrypted instances.
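The automatable steps map fairly directly onto EC2 API calls. Below is a minimal Python sketch of the first four steps and the last three, assuming `ec2` is a boto3 EC2 client (`boto3.client("ec2")`) and that the volume is the root volume of a single-volume HVM instance. The function names, the AMI name, and the default `root_device` and `architecture` values are illustrative assumptions that must match your original instance. Depending on the instance type you may also need extra `register_image` flags such as `EnaSupport=True`. Steps 5 and 6 (validation, repointing EIPs, load balancers, and DNS) stay manual.

```python
from typing import Any, Dict, List, Optional

# Assumption: `ec2` is a boto3 EC2 client (boto3.client("ec2")) or anything with
# the same methods. Function names and defaults are illustrative only.


def launch_encrypted_copy(
    ec2: Any,
    volume_id: str,
    region: str,
    instance_type: str,
    kms_key_id: Optional[str] = None,  # None: EC2 uses the default aws/ebs key
    root_device: str = "/dev/xvda",    # assumption: must match the old instance
    architecture: str = "x86_64",      # assumption: must match the old instance
) -> Dict[str, str]:
    # Step 1: snapshot the unencrypted volume and wait until it completes.
    plain_id = ec2.create_snapshot(
        VolumeId=volume_id, Description="pre-encryption"
    )["SnapshotId"]
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[plain_id])

    # Step 2: copy the snapshot with encryption turned on.
    copy_args = {
        "SourceRegion": region,
        "SourceSnapshotId": plain_id,
        "Encrypted": True,
        "Description": f"encrypted copy of {plain_id}",
    }
    if kms_key_id:
        copy_args["KmsKeyId"] = kms_key_id
    enc_id = ec2.copy_snapshot(**copy_args)["SnapshotId"]
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[enc_id])

    # Step 3: register an AMI whose root device is the encrypted snapshot.
    image_id = ec2.register_image(
        Name=f"encrypted-{volume_id}",
        Architecture=architecture,
        RootDeviceName=root_device,
        VirtualizationType="hvm",
        BlockDeviceMappings=[{"DeviceName": root_device, "Ebs": {"SnapshotId": enc_id}}],
    )["ImageId"]
    ec2.get_waiter("image_available").wait(ImageIds=[image_id])

    # Step 4: launch the replacement instance from that AMI.
    reservation = ec2.run_instances(
        ImageId=image_id, InstanceType=instance_type, MinCount=1, MaxCount=1
    )
    return {
        "plain_snapshot": plain_id,
        "encrypted_snapshot": enc_id,
        "image_id": image_id,
        "instance_id": reservation["Instances"][0]["InstanceId"],
    }


def retire_unencrypted(ec2: Any, old_instance_id: str, plain_snapshot_ids: List[str]) -> None:
    # Call only after steps 5 and 6 (validate, repoint EIPs/LBs/DNS) are done by hand.
    ec2.stop_instances(InstanceIds=[old_instance_id])  # step 7
    ec2.get_waiter("instance_stopped").wait(InstanceIds=[old_instance_id])
    for snap_id in plain_snapshot_ids:  # step 8
        ec2.delete_snapshot(SnapshotId=snap_id)
    ec2.terminate_instances(InstanceIds=[old_instance_id])  # step 9
```

Usage would look like `ids = launch_encrypted_copy(boto3.client("ec2"), "vol-0123456789abcdef0", "us-east-1", "t3.micro")` (placeholder IDs), then, once the new instance checks out and traffic is repointed, `retire_unencrypted(ec2, old_instance_id, [ids["plain_snapshot"]])`. Passing the client in keeps the sketch testable without AWS credentials.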

Remember that KMS gives you 20,000 requests per month for free; beyond that, the service is billable.
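Once the new instances are in place, the original finding can be re-checked. This is a minimal sketch, assuming the AWS Config recorder is already turned on and `config` is a boto3 Config client (`boto3.client("config")`). It enables the AWS managed rule `ENCRYPTED_VOLUMES` and lists the EBS volumes the rule still marks `NON_COMPLIANT`. The rule name `encrypted-volumes` and both function names are hypothetical. Evaluations run asynchronously, so results only appear some time after the rule is created.

```python
from typing import Any, List

# Assumption: `config` is a boto3 Config client (boto3.client("config")) and the
# configuration recorder is already on. The rule name below is hypothetical.
RULE_NAME = "encrypted-volumes"


def enable_encrypted_volumes_rule(config: Any) -> None:
    # ENCRYPTED_VOLUMES is an AWS managed rule that flags unencrypted EBS volumes.
    config.put_config_rule(
        ConfigRule={
            "ConfigRuleName": RULE_NAME,
            "Source": {"Owner": "AWS", "SourceIdentifier": "ENCRYPTED_VOLUMES"},
            "Scope": {"ComplianceResourceTypes": ["AWS::EC2::Volume"]},
        }
    )


def noncompliant_volumes(config: Any) -> List[str]:
    # Collect the volume IDs the rule currently marks NON_COMPLIANT,
    # following NextToken pagination until the last page.
    ids: List[str] = []
    kwargs = {"ConfigRuleName": RULE_NAME, "ComplianceTypes": ["NON_COMPLIANT"]}
    while True:
        page = config.get_compliance_details_by_config_rule(**kwargs)
        for result in page.get("EvaluationResults", []):
            qualifier = result["EvaluationResultIdentifier"]["EvaluationResultQualifier"]
            ids.append(qualifier["ResourceId"])
        token = page.get("NextToken")
        if not token:
            return ids
        kwargs["NextToken"] = token
```

An empty list from `noncompliant_volumes` after the migration is a quick sign that no unencrypted volumes are left behind.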


Amazon crashing on Prime Day made breaking news. It appears the company is having issues with the traffic load.

Given that Amazon has run on AWS since 2011, this is not a great sign for either Amazon or the scalability model it deployed on AWS.


Why do agencies post minimum security standards? The UK government recently released a minimum security standards document which all departments must meet or exceed. The document is available here: Minimum Cyber Security Standard.

The document is concise and clear. It contains some relevant items for decent security, covering the most common practices of the last 10 years. I’m not a UK citizen, but if agencies are protecting my data, why do they only have to meet minimum standards? In insurance terms, a minimum standard would be the “lowest acceptable criteria that a risk must meet in order to be insured”. Do I really want my data and privacy to sit in that class of lowest acceptable criteria for risk?

Now that you know government agencies apply minimum standards, let’s look at breach data. Breaches are becoming more common and more expensive, and this is confirmed by a report from the Ponemon Institute commissioned by IBM. The report states that the average breach costs $3.86 million, and the kicker is that there is a 27.8% chance of a recurrence.

There are two other figures in this report that astound me:

That means that after a company is breached, it takes on average 6 months to identify the breach and 2 months to contain it. The report goes on to say that 27% of the time a breach is due to human error and 25% of the time because of a system glitch.

Put this together: someone or some system makes a mistake, and it takes 6 months to identify and another 2 months to contain. Those numbers should scare every CISO, CIO, CTO, other executive, and security architect, because the biggest security threats are the people and systems working for the company.
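Adding up the report figures quoted above makes the point concrete (simple sums of the rounded numbers in the text):

```latex
\[
\begin{aligned}
\text{time to identify} + \text{time to contain} &\approx 6 + 2 = 8 \text{ months} \\
\text{human error} + \text{system glitch} &= 27\% + 25\% = 52\% \text{ of breaches}
\end{aligned}
\]
```

That is roughly eight months from breach to containment, and more than half of breaches traced to the company’s own people and systems.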

Maybe it’s time to move away from minimum standards and start forcing agencies and companies to adhere to a set of best practices for data security?


Let’s start with some basics of software development. No matter which software development lifecycle methodology is followed, it still seems to include some level of definition, development, QA, UAT, and production release. Somewhere in the process, multiple items are merged into a release. This still means your release to production could be monolithic.

The mighty big players like Google, Facebook, and Netflix (click any of them to see their development process) have revolutionized the concepts of Continuous Integration (CI) and Continuous Deployment (CD).

I want to question the future of CI/CD. Instead of consolidating a release, why not release a single item into production, validate it over a defined period of time, and then push the next release? This entire process would happen automatically, driven by a queue (FIFO) system.

Now take that into the world of corporate IT and SaaS platforms. I’m really thinking about software like Salesforce Commerce Cloud or Oracle’s NetSuite. I would want the SaaS platform to provide this FIFO system for loading my user code updates. The system would push and update the existing code while it continues to handle requests, so users wouldn’t see discrepancies. Some validation would happen, the code would activate, and a timer would start on the next release. If validation failed, the code could be rolled back automatically or manually.
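The FIFO idea can be sketched in a few lines of Python. This is a minimal in-memory model, not any SaaS platform’s API: the class and method names are hypothetical, the validation period is modelled as a callback that returns pass or fail instead of a real timer, and “rollback” simply removes the failed change, which is safe here only because exactly one change is ever in flight.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, List


@dataclass
class Release:
    name: str  # one change, e.g. a single fix or feature


@dataclass
class ReleaseQueue:
    # Hypothetical FIFO release pipeline: one change is pushed, validated,
    # and either kept or rolled back before the next one starts.
    validate: Callable[[Release], bool]  # stands in for the validation period/timer
    pending: Deque[Release] = field(default_factory=deque)
    live: List[str] = field(default_factory=list)  # changes in production, oldest first
    rolled_back: List[str] = field(default_factory=list)

    def submit(self, release: Release) -> None:
        self.pending.append(release)  # first in ...

    def step(self) -> bool:
        """Deploy the oldest pending release; return True if it stays live."""
        release = self.pending.popleft()  # ... first out
        self.live.append(release.name)    # push to production
        if self.validate(release):
            return True                   # validation passed; next release may go
        self.live.pop()                   # automatic rollback of just this change
        self.rolled_back.append(release.name)
        return False

    def run(self) -> None:
        # Drain the queue one release at a time.
        while self.pending:
            self.step()
```

For example, with `validate=lambda r: not r.name.startswith("bad")` and releases `fix-1`, `bad-2`, and `feature-3` submitted in that order, `run()` leaves `live == ["fix-1", "feature-3"]` and `rolled_back == ["bad-2"]`. Because only one change is in flight at a time, a failure points at exactly one item.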

Could this be a reality?


IPv6 implements Anycast for a number of benefits. The premise behind Anycast is that multiple nodes share the same address, and the network routes traffic to the interface with that Anycast address that is nearest according to the routing protocol’s measure of distance.

There is a lot of information on the Internet about Anycast as it relates to IPv6, starting with a deep dive in RFC 4291 - IP Version 6 Addressing Architecture. Cisco also covers it in its IPv6 Configuration Guide.

The more interesting item, which was a technical interview topic this week, was the extension to IPv4. The basic premise is that the same IP prefix can be announced via BGP from multiple geographic regions, and because of how internet routing works, traffic to an address in that prefix is routed to the closest node based on the BGP path.

However, this presents two issues. First, if a path in BGP disappears, the traffic ends up at another node, which creates state issues (for example, an established TCP session does not exist on the new node). Second, BGP routes based on path length rather than physical distance, so depending on how the upstream ISP is peered and routed, a node that is physically closer might not be in the preferred path, which adds latency.
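To make both issues concrete, here is a toy Python model of anycast route selection. It is a deliberate simplification: real BGP compares LOCAL_PREF (and vendor-specific weight) before AS_PATH length and has several further tie-breakers, and the site names, AS numbers, and distances below are made up. The only point is that selection follows AS_PATH length, not geography, and that a withdrawal silently moves clients to another node.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Announcement:
    site: str                  # anycast node announcing the shared prefix
    as_path: Tuple[int, ...]   # AS_PATH as seen by the client's network
    distance_km: int           # physical distance to the client; BGP never sees this


def best_path(routes: List[Announcement]) -> Optional[Announcement]:
    """Pick a route the way a heavily simplified BGP would: shortest AS_PATH.

    Ties fall back to the lowest site name so the result is deterministic.
    Returns None when every announcement has been withdrawn.
    """
    if not routes:
        return None
    return min(routes, key=lambda r: (len(r.as_path), r.site))


# Illustrative announcements of one anycast prefix (192.0.2.0/24, a documentation range).
ROUTES = [
    Announcement(site="nyc", as_path=(64500, 64510, 64520), distance_km=50),
    Announcement(site="lon", as_path=(64501, 64530), distance_km=5500),
]
```

With these routes, `best_path(ROUTES).site` is `"lon"` even though `nyc` is physically closer, which is the latency issue. If London withdraws its announcement, `best_path([r for r in ROUTES if r.site != "lon"])` returns the `nyc` node, and any TCP session that was established with London has no state there, which is the state issue.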

One of the uses for this is DDoS mitigation, which is deployed by the root name servers and also by CDN providers. Several RFCs discuss Anycast as a possible DDoS mitigation technique:

RFC 7094 - Architectural Considerations of IP Anycast

RFC 4786 - Operation of Anycast Services

CloudFlare (a CDN provider) discusses its Anycast solution in What is Anycast.

Finally, I’m a big advocate of conference papers, maybe because of my Master’s degree, or because 20 years ago, if you wanted to learn something, it came from either a book or conference proceedings. While researching this blog article, I came across a well-written 2015 research paper on DDoS mitigation with Anycast, Characterizing IPv4 Anycast Adoption and Deployment. It’s definitely worth a read, especially for how Anycast has been deployed to protect the root DNS servers and CDNs.


I see a lot of parallels between containers in 2018 and the server virtualization movement that started in 2001 with VMware. So I took a trip down memory lane. My history started in 2003/2004, when I was leveraging virtualization for datacenter and server consolidation. At IBM, we were pushing it with IT leadership to consolidate unused server capacity, especially in test and development environments. The delivery initially focused primarily on VMware GSX and local storage. I recall that the release of vMotion and additional storage virtualization tools led to a desire to move from local storage to SAN-based storage. That allowed us to discuss reducing downtime and the potential for production deployments. I also remember there was much buzz when EMC acquired VMware in 2004, and it made sense given the push into storage virtualization.

Back then it was the promise of reduced cost, smaller data center footprint, improved development environments, and better resource utilization. Sounds like the promises of Cloud and Containers today.


Serverless is becoming the 2018 technology hype. I remember when containers were gaining traction in 2012, and Docker in 2013. At technology conventions, all the cool developers were using containers. It solved a lot of challenges, but it was not a silver bullet. (But that’s a blog article for another day.)

Today, after an interview, I was asking myself: have containers lived up to the hype? They are great for CI/CD, getting rid of system administrator bottlenecks, and helping with rapid deployment, and some would argue they are fundamental to DevOps. So I started researching the hype. The people over at Cloud Foundry published container reports in 2016 and 2017.

Per the 2016 report, “our survey, a majority of companies (53%) had either deployed (22%) or were in the process of evaluating (31%) containers.”

Per the 2017 report, “increase of 14 points among users and evaluators for a total of 67 percent using (25%) or evaluating (42%).”

As a former technology VP/director/manager, I was always evaluating technology that had the potential to save costs, improve processes, speed up development, and improve production deployments. But a 25% adoption rate, up only 3 points from the previous year, is not moving the technology needle.
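Broken out, the quoted survey figures (treating the 2016 “deployed” group as “using”) show where the 14-point increase came from:

```latex
\[
\begin{aligned}
\text{2016: } & 22\% \text{ using} + 31\% \text{ evaluating} = 53\% \\
\text{2017: } & 25\% \text{ using} + 42\% \text{ evaluating} = 67\% \\
\text{change: } & 67 - 53 = 14 \text{ points in total} \\
& 25 - 22 = 3 \text{ points using}, \quad 42 - 31 = 11 \text{ points evaluating}
\end{aligned}
\]
```

Most of the growth was in evaluation, not in actual use.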

However, I am starting to see the same trend: Serverless is the new exciting technology that is going to solve development challenges, save costs, and improve the development process, and you are cool if you’re using it. But is it really serverless, or just a simpler way to use a container?

AWS Lambda is basically a container. (Another blog article will dig into the underpinnings of Lambda.) Where does the container run? **A server.**

It just means I don’t have to understand the underlying container, server, etc. So is it truly serverless? Or is it just the 2018 technology hype to get all of us development geeks excited that we don’t need to learn Docker or Kubernetes, or ask our sysadmin friends to provision another server?
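To illustrate the point, this is roughly all the code a developer writes for a Python Lambda function; the container, and the server under it, are AWS’s problem. A minimal sketch: the handler name `lambda_handler` is just the conventional default (it is configurable), and the `statusCode`/`headers`/`body` response shape assumes the function sits behind an API Gateway proxy integration.

```python
import json


def lambda_handler(event, context):
    # Lambda's runtime, running in a container on a server somewhere, calls this
    # with the triggering event (a dict) and a context object.
    name = (event or {}).get("name", "world")
    # Response shape expected by an API Gateway proxy integration.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}"}),
    }


if __name__ == "__main__":
    # Local run: no container or server to provision, just call the function.
    print(lambda_handler({"name": "serverless"}, None))
```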

Let me know your thoughts.


Got my CCNA certificate today via email. Far from the day of getting a beautiful package in the mail. The best is how Cisco lets you recertify after a long hiatus.


I think certification logos are interesting. I would not include them in emails, but some people do. I also would probably not include certifications in an email signature anymore.

Here are the ones I’ve collected in the last few weeks. I think moving forward, I’ll update the site header to...

This morning I sat and passed Cisco 200-310 Designing for Cisco Internetwork Solutions. The exam is not easy; it requires an 860 to pass. 17 years ago, when I took it, it only required a 755, and I scored 844. This time I scored 884. It’s a tough exam because it requires deep and broad networking knowledge across all domains (routing, switching, unified communications, and WLANs) and how to use them in network designs.

Passing that exam officially gives me a CCDA, which makes 7 certifications (5 AWS and 2 Cisco) in 5 weeks.

Next up is the Cisco 300-101 ROUTE exam.


Had a strong technical interview today. The interviewer asked questions about the topics in this outline.

- Virtualization and Hypervisors
- Security
- Docker and Kubernetes
- OpenStack/CloudStack (which I lack experience with)
- Chef
  - Security of data: see data bags
- CloudFormation: immutable infrastructure
- DNS
  - BIND
  - Route53
  - Anycast
    - Resolvers route to different servers
- Cloud
  - Azure
  - Moving a monolithic application to AWS
  - Explain the underpinnings of IaaS, PaaS, and SaaS.

These were all great questions for a technical interview. The interviewer was easy to converse with, and it was much more of a great discussion than an interview. The breadth and depth of the interview questions were impressive, and I was very impressed with the interviewer’s answers to my questions. I left the interview hoping that the interviewer would become a colleague.


I passed the 200-125 CCNA exam today. I actually scored higher than I did 17 years ago, though the old CCNA covered much more material. Technically, per Cisco guidelines, it’s 3-5 days before I become officially certified.

Primarily, I used VIRL to get the necessary hands-on experience, along with the Cisco Press CCNA study guide. Wendell Odom always does a good job, and his blog is beneficial for studying. The practice tests from MeasureUp are OK, but I wouldn’t get them again.

Next up is the 200-310 DESGN.


The last time I studied for Cisco certifications, I built a six-router, one-switch lab on my desk. One router had console connections to all the other routers, and its management port was connected to my home network, so I could telnet into each of the routers via their console ports. It was exciting and a great way to learn and simulate complex configurations. The routers had just enough power to run BGP and IPSec tunnels.

This time, I found VIRL, which is interesting as you build a network inside an Eclipse environment. On the backend, the simulator creates a network of multiple VMs.

So far, I’ve built a simple switch network. I’m using it with the cloud service Packet, as the memory and CPU requirements exceed what my laptop has. Packet provides a bare-metal server, which is required for how VIRL does a PXE boot. I wish there were a bare-metal option on AWS.

I’m still trying to figure out how to upload complex configurations and troubleshoot them.

The product is very interesting as it provides a learning environment for a few hundred dollars vs. the couple thousand which I spent last time to build my lab.

