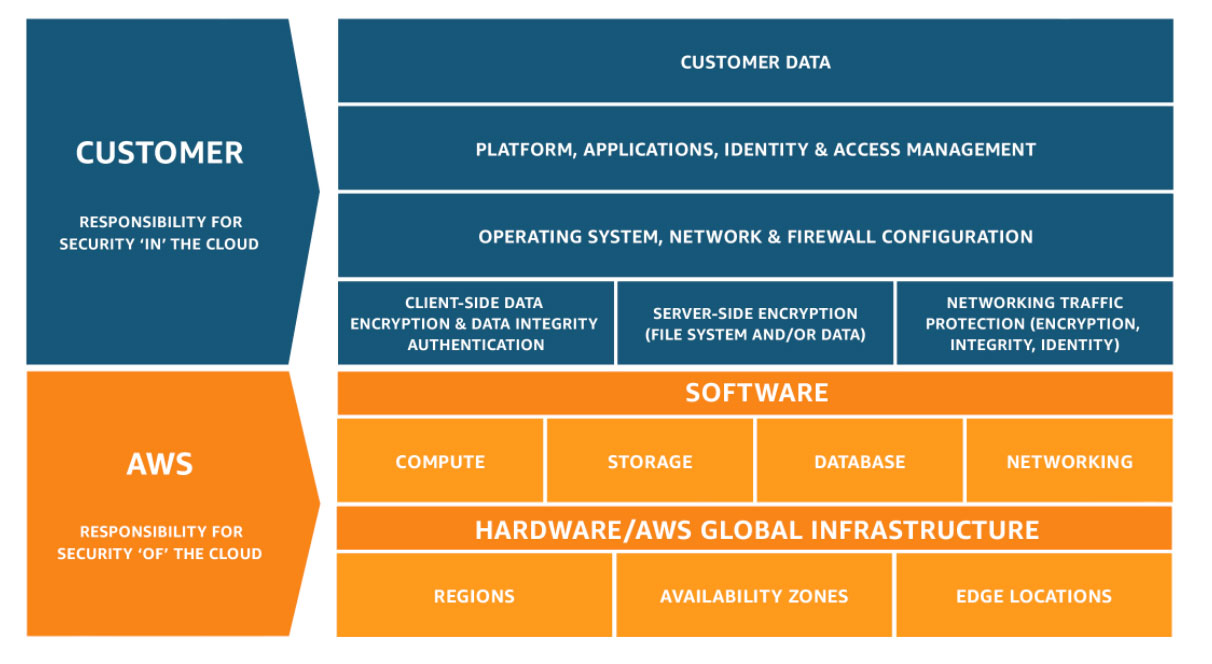

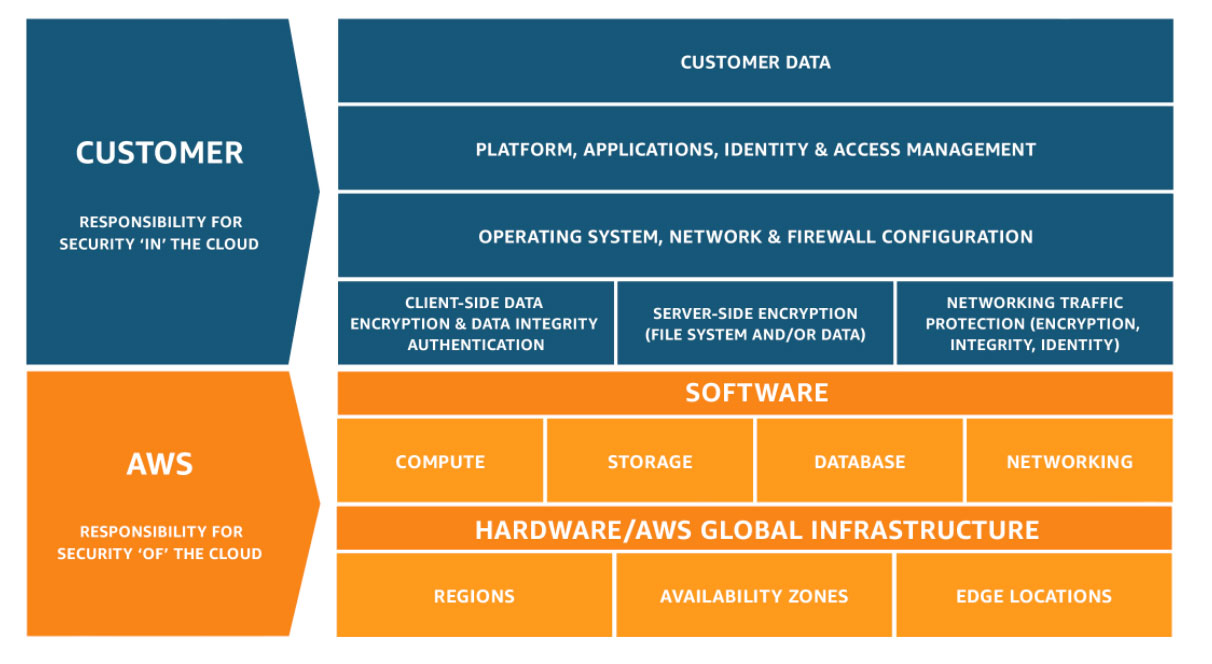

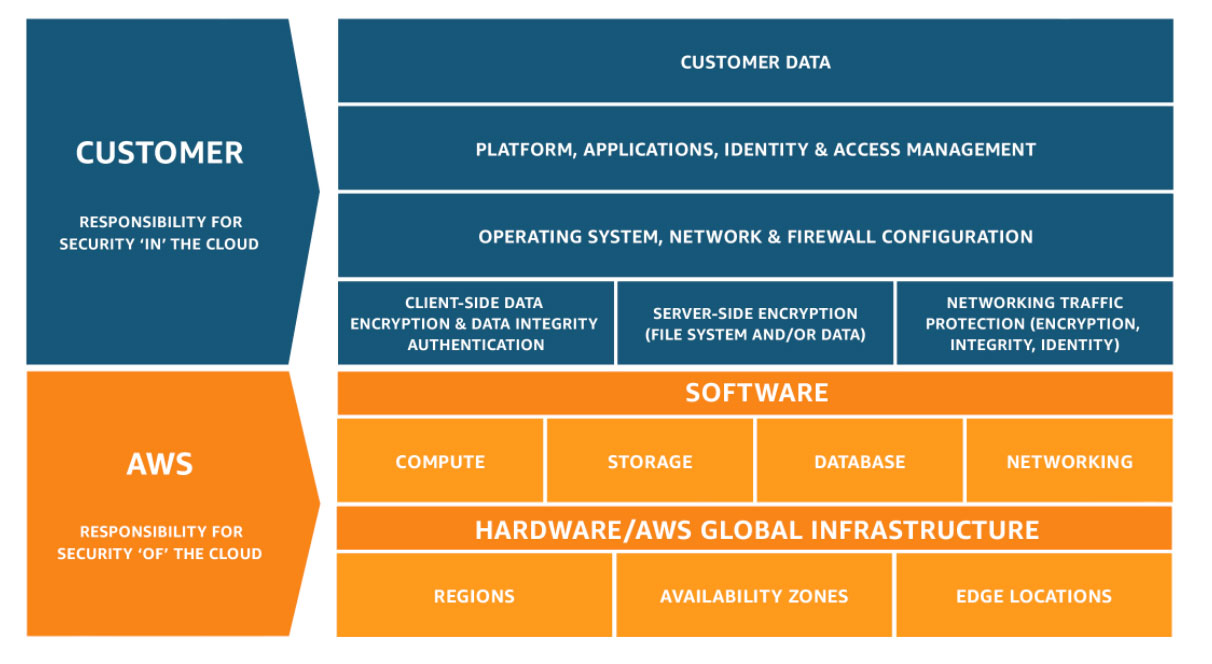

AWS has the shared responsibility model for security.

Anyone on the AWS platform understands this model. However, security on AWS is not easy, as AWS has always been a platform of innovation. AWS has released a ton of security services and features: AWS Config, Macie, Shield, AWS Web Application Firewall (WAF), and Service Control Policies (SCPs). Over the years, it also released Landing Zones and then Control Tower, which build security in when starting a multi-account setup on AWS. Lastly, there is the Well-Architected Security Pillar to review and confirm that your workload is well architected.

Last month, AWS released a comprehensive guide, the AWS Security Reference Architecture. It was built by Professional Services, the customer implementation arm of AWS.

I’m not going to try to summarize a 62-page document in a blog article. The document is mainly about defense in depth, which is security at each layer of the workload. I have two key observations. The first is that it follows Control Tower guidance, although some terms have changed. It requires workloads to be in a separate OU from security and infrastructure (shared services). Again, these are general security principles that limit the blast radius if an application or account is compromised. The security account and the log collection account need to be separate, which matches the OU structure Control Tower recommends. Also, keeping log data in an immutable state is best for audit analysis.

The second observation is that it now talks about leveraging the Infrastructure account for egress and ingress traffic to the internet. This is only possible with a Transit Gateway or a Transit VPC, which the document defines but does not show as part of the VPC diagram.

Maybe it’s just because of my hyperfocus on renewing certifications. However, I notice the bleed-over between networking and security, and how proper networking architecture is the start of good security hygiene.

Dark Reading published a blog article entitled “6 Reasons Why Employees Violate Security Policies.”

The 6 reasons according to the article are:

- Ignorance

- Convenience

- Frustration

- Ambition

- Curiosity

- Helpfulness

I think they’re neglecting the root of the issue: draconian security policies that don’t make things more secure. Over the years, I’ve seen such policies coming from InfoSec groups. It’s common for developers to want to use the tools they’re comfortable with. In an extreme case, I’ve seen developers who wanted to use Eclipse for development, but Eclipse was forbidden because, according to some InfoSec policy, the only safe editor was vi (probably slightly exaggerated). Other extreme cases include banning Evernote or OneNote because they use cloud storage. I’m assuming here that no one is putting all their confidential customer data in a OneNote notebook.

From what I’ve seen, employees violate security policies to get work done the way they want to do it. Maybe that is ignorance, convenience, frustration, ambition, or any of the other reasons. Or maybe, if you’ve used something for 10 years, you don’t want to learn something new for development or note-taking, given there are many other things to learn and do that add value to your job and employer.

To keep employees from violating InfoSec policies, maybe InfoSec groups, instead of writing draconian security policies, could focus on identifying the security vulnerabilities that are more likely targets for hackers and putting policies, procedures, and operational security around them. Lastly, InfoSec could spend time educating employees on what confidential data is and where it is allowed to be stored.

Disclaimer: This blog article is not meant to condone, encourage, or motivate people to violate security policies.

I was listening to the Architech podcast, and someone asked, “Does everything today tie back to Kubernetes?” The more general version of the question is, “Does everything today tie back to containers?” The answer is quickly becoming yes. What Google figured out years ago, running an environment where everything was containerized, is becoming mainstream.

To support this, Amazon now has three different container technologies, with one more in the works.

ECS is Amazon’s first container offering. ECS is a container orchestration service that supports Docker containers.

Fargate for ECS is a managed offering of ECS where all you do is deploy Docker images and AWS owns the full management. More exciting is that Fargate for EKS has been announced and is pending release. This will be fully managed Kubernetes.

EKS is the latest offering, which became generally available (GA) in June. It is a fully managed control plane for Kubernetes. The worker nodes are EC2 instances you manage, which can run an Amazon Linux AMI or one you create.

Lately, I’ve been exploring EKS, so the next blog article will be on how to get started with EKS.

In the meantime, what have you containerized today?

A new study about cloud-native application security, sponsored by Capsule8, Duo Security, and Signal Sciences, was published. Cloud-native applications are applications specifically built for the cloud. The study is entitled The State of Cloud Native Security. Its observations and conclusions are interesting. What was surprising is the complete lack of discussion about moving traditional SecOps to a SecDevOps model.

The other item, which I found shocking given all the recent breaches, is that page 22 shows only 71% of the surveyed companies have a SecOps function.

DevOps is well defined and has a great definition on Wikipedia. We could argue all day about who is really doing DevOps (see this post for context). Let’s assume there is an efficient and effective DevOps organization. If this is the case, DevOps requires a partner in security. Security needs to manage compliance, security governance, requirements, and risks, which requires functions in development, operations, and analysis. How can security keep up with effective DevOps? By building a DevOps organization for security, which we call SecDevOps. SecDevOps is about automating and monitoring compliance, security governance, requirements, and risks, and especially about updating the analysis as DevOps changes the environment.

As organizations support DevOps but don’t seem as ready to support SecDevOps, how can security organizations evolve to support DevOps?

Amazon recently released a presentation on the Data-safe Cloud. It appears to be based on some Gartner questions and other data AWS collected. The presentation discusses six core benefits of a secure cloud.

- Inherit Strong Security and Compliance Controls

- Scale with Enhanced Visibility and Control

- Protect Your Privacy and Data

- Find Trusted Security Partners and Solutions

- Use Automation to Improve Security and Save Time

- Continually Improve with Security Features

I find this marketing material confusing at best, so let’s analyze what it is saying.

Point 1, Inherit Strong Security and Compliance Controls, references all the compliance certifications AWS achieves. However, it loses track of the shared responsibility model and doesn’t even mention it until page 16. Amazon has exceptional compliance in place, which most data center operators and SaaS providers struggle to achieve. That does not mean my data or services running within the Amazon environment meet those compliance requirements.

Points 2, 4, and 6 are not benefits of a secure cloud. They might be high-level objectives one uses to form a strategy for getting to a secure cloud.

Point 3 I don’t even understand. The protection of privacy and data has to be the number one concern when building out workloads, whether in the cloud or in private data centers. It’s not a benefit of a secure cloud, but a requirement.

For point 5, I am a big fan of automation and automating everything. Again, this is not a benefit of a secure cloud; it’s a way to have a repeatable, secure process wrapped in automation, which leads to a secure cloud.

Given the discussions around cloud security and all the negative press, including the recent GoDaddy AWS S3 bucket exposure, Amazon should be publishing better content to help move the security discussion forward.

One of the things I’ve been fascinated by of late is the concept of Security as Code. I’ve just started reading the book DevOpsSec by Jim Bird. One of the things the book talks about is injecting security into the CI/CD pipeline for applications: basically merging development and security, as DevOps merged development and operations. I’ve argued for years that DevOps is a lot of things, but fundamentally it was a way for operations to become part of the development process, which led to the automation of routine operational tasks and recovery. So if we look at DevOpsSec, it assumes security is part of the development process, and I mean more than just standard code analysis using Veracode. What would it mean if security processes and recovery could be automated?

Consider Security Operations Centers (SOCs), where people interpret security events and react. Over the last few years, many of the improvements in SOCs have come from AI and machine learning, reducing the headcount required to operate a SOC. What if security operations were automated? Could code be generated from security triggers and provided to the developer for review and incorporation into the next release?

We talk about infrastructure as code, where data can be used to create rules and infrastructure through automation. On AWS, you can already deploy security-tool AMIs, security groups, and NACLs with CloudFormation. My thoughts go to firewall-based AMIs, that is, appliances for external access. The access lists these appliances require are complex and need enormous review and processing within an organization. Could access lists be constructed from a mapping of the code and automatically generated for review? Could the generated access list be compared against the existing access list to detect duplicates?
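As a thought experiment, the deduplication question can be sketched in a few lines of Python. This is a minimal sketch, assuming access-list entries have already been normalized into a hypothetical `AclEntry` tuple (action, protocol, source CIDR, destination CIDR, port) with IPv4 addresses only; real firewall syntax varies by vendor and is not modeled. A generated entry is flagged when an existing entry with the same action, protocol, and port already covers its source and destination networks.

```python
from ipaddress import ip_network
from typing import NamedTuple


class AclEntry(NamedTuple):
    """Hypothetical normalized access-list entry (not any vendor's format)."""
    action: str       # "permit" or "deny"
    protocol: str     # e.g. "tcp"
    source: str       # IPv4 CIDR, e.g. "10.0.0.0/16"
    destination: str  # IPv4 CIDR
    port: int


def covers(existing: AclEntry, new: AclEntry) -> bool:
    """True if `existing` already matches every packet `new` would match."""
    return (
        existing.action == new.action
        and existing.protocol == new.protocol
        and existing.port == new.port
        and ip_network(new.source).subnet_of(ip_network(existing.source))
        and ip_network(new.destination).subnet_of(ip_network(existing.destination))
    )


def find_duplicates(generated, existing):
    """Map each redundant generated entry to the first existing entry covering it."""
    redundant = {}
    for new in generated:
        for old in existing:
            if covers(old, new):
                redundant[new] = old
                break
    return redundant


existing_acl = [AclEntry("permit", "tcp", "10.0.0.0/16", "192.168.1.0/24", 443)]
generated_acl = [
    AclEntry("permit", "tcp", "10.0.5.0/24", "192.168.1.10/32", 443),  # covered
    AclEntry("permit", "tcp", "10.1.0.0/24", "192.168.1.10/32", 443),  # genuinely new
]
# Only the first generated entry is reported as a duplicate.
print(find_duplicates(generated_acl, existing_acl))
```

This ignores rule order: on a first-match firewall, a covering permit that sits below a deny is not really a duplicate, so a real tool would have to evaluate the list in sequence.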

It’s definitely an interesting topic, and hopefully it evolves over the next few years.

A longtime issue with networking vendors is providing ports at one speed and throughput at another. I remember dealing with it back in 2005 with the first generation of Cisco ASAs, which primarily replaced the PIX firewall. Those firewalls provided 1Gbps ports, but the ASA could only handle about half that bandwidth in throughput.

Some marketing genius created the terms wire speed and throughput.

If you’re curious about this, go look at the Cisco Firepower NGFW firewalls. The 4100 series has 40Gbps interfaces, but depending on the model, throughput is between 10Gbps and 24Gbps with FW+AVC+IPS turned on.

I have referenced several Cisco devices, but this issue is not specific to Cisco. Take a look at Palo Alto Networks firewalls: the PA-52XX series has four 40Gbps ports but supports between 9Gbps and 30Gbps of throughput with full threat protection on.
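To put those vendor figures side by side, here is a quick Python calculation of rated inspected throughput as a share of port capacity, using only the numbers quoted above. The number of 40Gbps interfaces on the Firepower 4100 isn’t given here, so it is compared against a single port; the PA-52XX is compared against all four ports (160Gbps aggregate).

```python
def throughput_share(throughput_gbps: float, port_gbps: float, ports: int = 1) -> float:
    """Fraction of aggregate port capacity that the rated throughput can fill."""
    return throughput_gbps / (port_gbps * ports)


# name -> ((low, high) rated throughput in Gbps, port speed in Gbps, ports compared)
figures = {
    "Firepower 4100, one 40Gbps port": ((10, 24), 40, 1),
    "PA-52XX, four 40Gbps ports": ((9, 30), 40, 4),
}

for name, ((low, high), speed, ports) in figures.items():
    low_share = throughput_share(low, speed, ports)
    high_share = throughput_share(high, speed, ports)
    print(f"{name}: {low_share:.0%} to {high_share:.0%} of wire speed")
```

That works out to 25% to 60% of a single port for the Firepower 4100 and roughly 6% to 19% of aggregate port capacity for the PA-52XX.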

The technology exists, so why aren’t networking vendors able to provide wire-speed throughput between ports, even with full traffic inspection turned on? I would very much like to know your thoughts on this topic, so please leave a comment.

DDoS attacks are too frequent on the internet. A DDoS attack sends more requests than can be processed. Many times, the requester’s machine has been compromised to become part of a larger DDoS network. This article is not going to explain all the various types, as there is a whole list of them here. Let’s discuss a particular type of DDoS attack designed to overwhelm your web server. This traffic appears as legitimate GET or POST requests. GET requests would hit /index.html or any other page at 50 requests per minute; POST requests would hit your myApi.php and attempt to post data at 50-plus requests per minute.

This article focuses on recommendations, using AWS and other technologies, for stopping a recent HTTP DDoS attack. The first question is how to distinguish the DDoS attack from regular traffic. The second is how to prevent an HTTP DDoS attack.

Identifying a DDoS attack

The first step is to understand your existing traffic. If you normally have 2,000 requests per day and all of a sudden you have 2,000,000 requests in a morning, it’s a good indication you are under a DDoS attack. The easiest way to identify this is to pull the access_log into a monitoring service like Splunk, AlienVault, Graylog, etc. From there, real-time trend analysis will show the issue. If the web servers are behind an ALB, make sure the ALB is logging requests and that the ALB logs are analyzed instead of the web server access logs. The ALB also supports the X-Forwarded-For header, so the client IP can be passed through to the web servers.
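Before the logs reach a monitoring service, the same trend analysis can be approximated with a short script. This is a minimal sketch, assuming Apache/NGINX common or combined format access logs where the client IP is the first field (if the servers sit behind an ALB, that field must hold the X-Forwarded-For value, not the ALB’s address). The regex and the function name `noisy_clients` are illustrative assumptions, not a complete log parser; the default threshold of 50 requests per minute mirrors the attack described above. ALB access logs use a different format and would need a different pattern.

```python
import re
from collections import Counter

# Common/combined log format: client IP, identd, user, then "[timestamp]".
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[([^\]]+)\]')


def noisy_clients(lines, threshold=50):
    """Return {(ip, minute): count} for IPs at or above `threshold` requests/minute."""
    per_minute = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that don't look like access-log entries
        ip, timestamp = match.group(1), match.group(2)
        minute = timestamp[:17]  # "10/Oct/2018:13:55" drops seconds and time zone
        per_minute[(ip, minute)] += 1
    return {key: count for key, count in per_minute.items() if count >= threshold}


sample = [
    f'203.0.113.7 - - [10/Oct/2018:13:55:{s:02d} +0000] "POST /myApi.php HTTP/1.1" 200 12'
    for s in range(60)
] + ['198.51.100.2 - - [10/Oct/2018:13:55:01 +0000] "GET /index.html HTTP/1.1" 200 512']

print(noisy_clients(sample))  # {('203.0.113.7', '10/Oct/2018:13:55'): 60}
```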

Preventing a DDoS attack

There is no way to truly prevent an HTTP DDoS attack. To deal with this specific event, the following mitigation techniques were explored:

- AWS Shield: Shield Advanced provides advanced DDoS protection and works alongside AWS WAF, whose rate-based rules can limit requests.

- The free option is the rate limiting available in Apache and NGINX, which can throttle specific IP addresses. In Apache, this is implemented by a number of modules; ModSecurity is usually at the top of the list, and a great configuration example that includes X-Forwarded-For handling is available on GitHub.

- An EC2 instance can run as a proxy in front of the web server. The proxy can be configured to suppress the traffic using ModSecurity or other AWS Marketplace offerings, including other WAF options.

- An AWS WAF can be deployed on the ALB or on CloudFront.

- Lastly, the most expensive option is to deploy Auto Scaling groups to absorb all the traffic.
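The per-IP rate limiting in the options above boils down to counting each client’s requests within a time window. Here is a minimal sliding-window sketch in Python to illustrate the idea; it is not how ModSecurity, NGINX, or AWS WAF implement it, the class name `SlidingWindowLimiter` is hypothetical, and the limit of 50 requests per 60 seconds simply mirrors the attack described above. The clock is injectable so the behavior can be tested without waiting.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client IP in any `window`-second span."""

    def __init__(self, limit=50, window=60.0, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.hits = defaultdict(deque)  # ip -> timestamps of allowed requests

    def allow(self, ip):
        now = self.clock()
        hits = self.hits[ip]
        # Drop timestamps that have slid out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: the server would reject, e.g. with a 429
        hits.append(now)
        return True


fake_now = [0.0]
limiter = SlidingWindowLimiter(limit=50, window=60, clock=lambda: fake_now[0])
results = [limiter.allow("203.0.113.7") for _ in range(51)]
print(results.count(True), results.count(False))  # 50 1

fake_now[0] = 60.0  # a full window later, the client is allowed again
print(limiter.allow("203.0.113.7"))  # True
```

Rejected requests are not recorded, so a blocked client regains access as soon as its older allowed requests age out; recording rejections instead would keep a persistent attacker blocked longer.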

Please leave a comment if there are other options that should have been investigated.

To solve this specific issue, an AWS WAF was deployed on the ALB. One thing to consider is preventing attacks from bypassing the ALB and hitting the website directly. This is easily accomplished by allowing HTTP/HTTPS from anywhere only to the ALB, and having the ALB and the EC2 instances share a security group that allows HTTP/HTTPS from everything in that security group.
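One way to express that layout is as security group ingress rules. This is a sketch under an assumed setup: the ALB carries two security groups (a public one open to the internet and a shared one), while the EC2 instances carry only the shared group. The helper functions are hypothetical; they build lists in the `IpPermissions` shape that boto3’s `authorize_security_group_ingress` accepts, and the boto3 calls at the end are left commented out because they need credentials and real group IDs.

```python
WEB_PORTS = (80, 443)  # HTTP and HTTPS


def alb_public_ingress():
    """Ingress for the ALB-only group: HTTP/HTTPS from anywhere (IPv4)."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            "IpRanges": [{"CidrIp": "0.0.0.0/0"}],
        }
        for port in WEB_PORTS
    ]


def shared_group_ingress(shared_sg_id):
    """Ingress for the group shared by the ALB and EC2: HTTP/HTTPS from members only."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            "UserIdGroupPairs": [{"GroupId": shared_sg_id}],
        }
        for port in WEB_PORTS
    ]


# With boto3 and credentials configured (group IDs are placeholders):
# ec2 = boto3.client("ec2")
# ec2.authorize_security_group_ingress(GroupId=alb_sg_id, IpPermissions=alb_public_ingress())
# ec2.authorize_security_group_ingress(GroupId=shared_sg_id, IpPermissions=shared_group_ingress(shared_sg_id))
```

Because the instances never receive the public group, a request to an instance’s address from the internet matches no ingress rule and is dropped.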

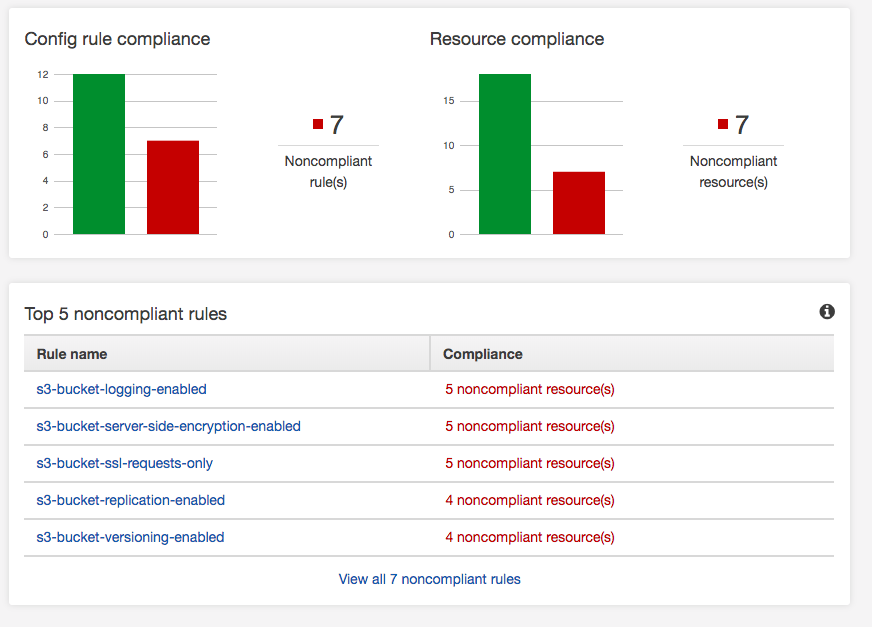

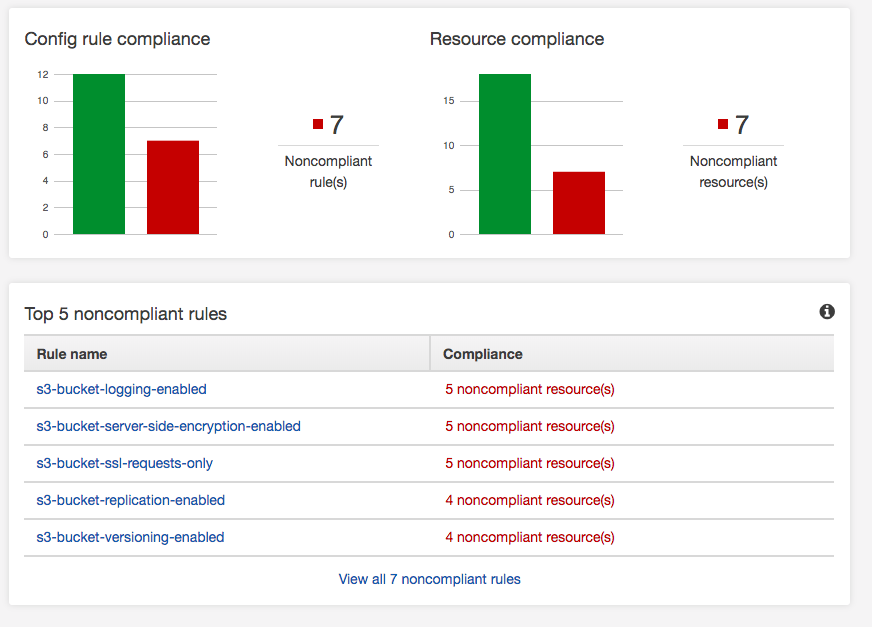

If you have an AWS deployment, make sure you turn on AWS Config. It has a whole bunch of built-in rules, and you can add your own to validate the security of your AWS environment as it relates to AWS services. Amazon provides good documentation and a GitHub repo, and Sumo Logic has a quick how-to on turning it on. It’s straightforward to turn on and use. AWS provides some preconfigured rules, and those are what this AWS environment is validated against. There is a screenshot of the results. Aside from turning it on, you have to decide which rules are valid for you. For instance, not all S3 buckets have a business requirement to replicate, so I’d expect the replication rule to always report noncompliant resources. However, one of my findings yesterday was unencrypted EBS volumes. Encrypting existing EBS volumes takes 9 easy steps:

- Make a snapshot of the EBS volume.

- Copy the snapshot to a new snapshot, selecting encryption. Use the AWS KMS key you prefer or the Amazon default aws/ebs key.

- Create an AMI from the encrypted snapshot.

- Launch the AMI created from the encrypted snapshot to create a new instance.

- Check that the new instance is functioning correctly and there are no issues.

- Update EIPs, load balancers, DNS, etc. to point to the new instance.

- Stop the old unencrypted instances.

- Delete the unencrypted snapshots.

- Terminate the old unencrypted instances.
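The first two steps can be scripted. Here is a minimal sketch, assuming boto3’s EC2 client: `create_snapshot`, the `snapshot_completed` waiter, and `copy_snapshot` with `Encrypted=True` are real boto3 calls, but the function name `encrypted_copy_of_volume` is hypothetical, and the client is passed in so the logic can be exercised without AWS credentials. Creating the AMI, cutting over, and cleanup (the remaining steps) stay manual here.

```python
def encrypted_copy_of_volume(ec2, volume_id, region, kms_key_id=None):
    """Steps 1-2: snapshot an EBS volume, then copy the snapshot with encryption on.

    `ec2` is expected to be a boto3 EC2 client, e.g. boto3.client("ec2", region_name=region).
    Returns the ID of the encrypted snapshot.
    """
    # Step 1: snapshot the unencrypted volume and wait until it completes.
    snap = ec2.create_snapshot(VolumeId=volume_id, Description=f"pre-encryption {volume_id}")
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[snap["SnapshotId"]])

    # Step 2: copy the snapshot within the same region with encryption enabled.
    copy_args = {
        "SourceRegion": region,
        "SourceSnapshotId": snap["SnapshotId"],
        "Encrypted": True,
        "Description": f"encrypted copy of {snap['SnapshotId']}",
    }
    if kms_key_id:  # without a key ID, AWS uses the account's default EBS key
        copy_args["KmsKeyId"] = kms_key_id
    encrypted = ec2.copy_snapshot(**copy_args)
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[encrypted["SnapshotId"]])
    return encrypted["SnapshotId"]


# Usage (needs AWS credentials; the volume ID is a placeholder):
# import boto3
# ec2 = boto3.client("ec2", region_name="us-east-1")
# print(encrypted_copy_of_volume(ec2, "vol-0123456789abcdef0", "us-east-1"))
```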

Remember that KMS gives you 20,000 requests per month for free; after that, the service is billable.
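Deciding which AWS Config rules are valid for you can also be scripted, so that accepted findings such as S3 replication don’t hide real ones such as unencrypted volumes. This is a minimal sketch using the boto3 Config client call `describe_compliance_by_config_rule`, with manual `NextToken` paging. The function name and the `accepted_rules` set are assumptions you would maintain yourself, and the rule names in the usage comment are the default names AWS gives those managed rules, which may differ in your account.

```python
def actionable_noncompliant_rules(config, accepted_rules=frozenset()):
    """Return names of NON_COMPLIANT Config rules that are not on the accepted list.

    `config` is expected to be a boto3 AWS Config client: boto3.client("config").
    """
    names, token = [], None
    while True:
        kwargs = {"ComplianceTypes": ["NON_COMPLIANT"]}
        if token:
            kwargs["NextToken"] = token
        page = config.describe_compliance_by_config_rule(**kwargs)
        for item in page.get("ComplianceByConfigRules", []):
            if item["ConfigRuleName"] not in accepted_rules:
                names.append(item["ConfigRuleName"])
        token = page.get("NextToken")
        if not token:
            return names


# Usage (needs AWS credentials):
# import boto3
# accepted = {"s3-bucket-replication-enabled"}  # no business need to replicate
# print(actionable_noncompliant_rules(boto3.client("config"), accepted))
```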

Why do agencies post minimum security standards? The UK government recently released a minimum security standards document which all departments must meet or exceed. The document is available here: Minimum Cyber Security Standard.

The document is concise, short, and clear. It contains relevant items for decent security, covering the most common practices of the last 10 years. I’m not a UK citizen, but if agencies are protecting my data, why are they only required to meet minimum standards? In insurance terms, a minimum standard is the “lowest acceptable criteria that a risk must meet in order to be insured.” Do I really want the risk to my data and privacy held to the lowest acceptable criteria?

Now that you know government agencies apply minimum standards, let’s look at breach data. Breaches are becoming more common and more expensive, as confirmed by a report from the Ponemon Institute commissioned by IBM. The report states that a breach costs $3.86 million on average, and the kicker is that there is a recurrence 27.8% of the time.

There are two other figures in this report that astound me: after a company is breached, it takes on average 6 months to identify the breach and another 2 months to contain it. The report goes on to say that 27% of breaches are due to human error and 25% are due to a system glitch.

Put this together: a person or a system makes a mistake, and it takes 6 months to identify and 2 more months to contain. Those numbers should scare every CISO, CIO, CTO, other executive, and security architect, because the biggest security threats are the people and systems working for the company.

Maybe it’s time to move away from minimum standards and start forcing agencies and companies to adhere to a set of best practices for data security?

awsarch went SSL.

Amazon offers free SSL certificates through AWS Certificate Manager (ACM) for domains served through an ELB, CloudFront, Elastic Beanstalk, or API Gateway, or deployed with AWS CloudFormation.

More information is available on the Amazon ACM service page.

Basically, it required setting up an Application Load Balancer, updating DNS, updating .htaccess, and fixing the wp-config file.
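Behind an Application Load Balancer that terminates TLS, the web server sees plain HTTP from the ALB, so an HTTPS redirect has to check the `X-Forwarded-Proto` header the ALB adds rather than the connection itself; that is a common reason both .htaccess and the WordPress configuration need changes. The exact edits made here aren’t shown, so this is only a Python sketch of that decision logic under that assumption, with a hypothetical function name `https_redirect`.

```python
def https_redirect(headers, host, path):
    """Return the HTTPS URL to redirect to, or None if the client already used HTTPS.

    `headers` is a dict of request headers as received from the ALB.
    """
    # Header names are case-insensitive, so normalize them before looking up.
    normalized = {name.lower(): value for name, value in headers.items()}
    # A missing header is treated as plain HTTP (e.g. a request that bypassed the ALB).
    proto = normalized.get("x-forwarded-proto", "http").lower()
    if proto == "https":
        return None  # already HTTPS between the browser and the ALB
    return f"https://{host}{path}"


print(https_redirect({"X-Forwarded-Proto": "http"}, "example.com", "/blog/"))
print(https_redirect({"X-Forwarded-Proto": "https"}, "example.com", "/blog/"))
```

Checking the connection scheme instead would see HTTP on every request and redirect forever, which is the loop the header check avoids.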

Now the site is served over HTTPS, and the weird non-HTTPS browser messages went away. Come July, Chrome will start showing a warning sign on non-HTTPS sites, per this Verge article.

Free SSL certificates can also be acquired here https://letsencrypt.org/
