Amazon recently released a presentation on the Data-safe Cloud. It appears to be based on a Gartner question and other data AWS collected. The presentation discusses six core benefits of a secure cloud:

- Inherit Strong Security and Compliance Controls

- Scale with Enhanced Visibility and Control

- Protect Your Privacy and Data

- Find Trusted Security Partners and Solutions

- Use Automation to Improve Security and Save Time

- Continually Improve with Security Features

I find this marketing material confusing at best. Let’s analyze what it is saying.

Point 1, Inherit Strong Security and Compliance Controls, references all the compliance AWS has achieved. However, it loses track of the shared responsibility model, which isn’t even mentioned until page 16. The compliance Amazon has in place is exceptional, and most data center operators or SaaS providers struggle to achieve it. That does not mean my data or services running within the Amazon environment meet those compliance requirements.

Points 2, 4, and 6 are not benefits of a secure cloud. They might be high-level objectives one uses to form a strategy for getting to a secure cloud.

Point 3 I don’t even understand. The protection of privacy and data has to be the number one concern when building out workloads, whether in the cloud or in private data centers. It’s not a benefit of a secure cloud but a requirement.

For point 5, I am a big fan of automation and of automating everything. Again, this is not a benefit of a secure cloud but a means to one: a repeatable, secure process wrapped in automation is what leads to a secure cloud.
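As one illustration of what a repeatable, automated check might look like, here is a minimal sketch in Python. It assumes you already have a bucket policy as a JSON string (fetching it is left out), and the function names `is_wildcard` and `public_statements` are hypothetical, chosen only for this example. It is not a complete audit: it ignores ACLs, account-level public access settings, and any statement that carries a `Condition`.

```python
import json


def is_wildcard(principal):
    """Return True if a policy Principal grants access to everyone."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        aws = principal.get("AWS")
        values = aws if isinstance(aws, list) else [aws]
        return "*" in values
    return False


def public_statements(policy_json):
    """Return the Allow statements that are open to any principal.

    Assumption: statements with a Condition are skipped, since the
    condition may narrow access. A real review would inspect them too.
    """
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may not be wrapped in a list
        statements = [statements]
    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        if is_wildcard(stmt.get("Principal")) and "Condition" not in stmt:
            flagged.append(stmt)
    return flagged


# Hypothetical policy used only to exercise the check.
example_policy = json.dumps({
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Sid": "TeamWrite", "Effect": "Allow",
         "Principal": {"AWS": "arn:aws:iam::123456789012:root"},
         "Action": "s3:PutObject", "Resource": "arn:aws:s3:::example-bucket/*"},
    ],
})

for stmt in public_statements(example_policy):
    print("Publicly accessible statement:", stmt.get("Sid"))
```

Run on a schedule or in a deployment pipeline, a check like this is the kind of process that falls on the customer side of the shared responsibility model.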

Given the discussions around cloud security and all the negative press, including the recent GoDaddy AWS S3 bucket exposure, Amazon should be publishing better content to help move the security discussion forward.

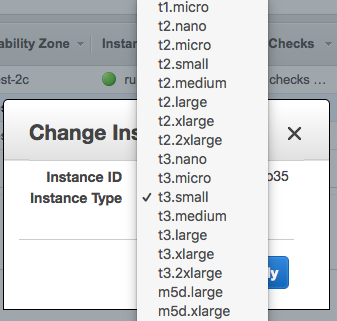

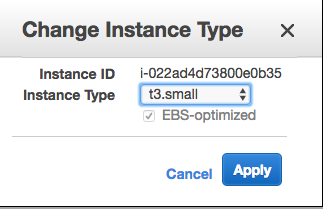
