Open source has been around for decades, but the real initiatives started in 1998. Due to some recent experiences, I started pondering open source and the future of software.

I believe the future of software is open source where a company which wraps enterprise support around it.

Take any open source software, if you need a feature typically someone has built it. If they haven’t, your team ends up creating it and adding it back to the project. Open source has the power of community vs a company with a product manager and deadlines to ship based on some roadmap built by committee. I made it too simple, open source has a product manager, really in most communities they are gate keeper. They own accepting features and setting direction, in some cases it’s the original developer like Linux, or sometimes it’s a committee, However, at the end of the day either commercial or open source has a product owner.

It’s an interesting paradux which created two opposing questions. First, why isn’t all software open sourced? Why would a company who has spent millions In development going to give the software away and charge for services?

The answer to the first question is see question two. The answer to the second question is giving away software is not financially viable if millions have been invested unless a robust software support model is supporting the development of software.

I worked for many organizations who’s IT budget was lean and agile, Open source was was minimal budget dollars. I have worked for other organizations whose budget is exceptionally robust and requires supported software as part of governance.

Why not replace the license model with a support model, and allow me or even more importantly the community access to the source code, contribute and drive innovation. Based on users, revenue or some other metric charge me for support or allow me to opt out. Seems like a reasonable future to me.

Open source has been around for decades, but the real initiatives started in 1998. Due to some recent experiences, I started pondering open source and the future of software.

I believe the future of software is open source where a company which wraps enterprise support around it.

Take any open...

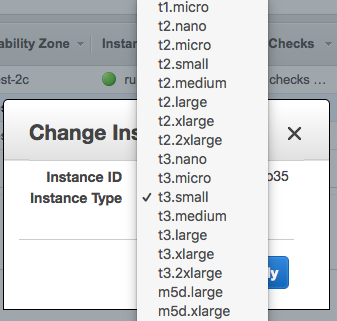

Change Instance

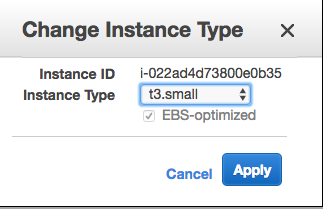

Change Instance T3 EBS Optimized

T3 EBS Optimized