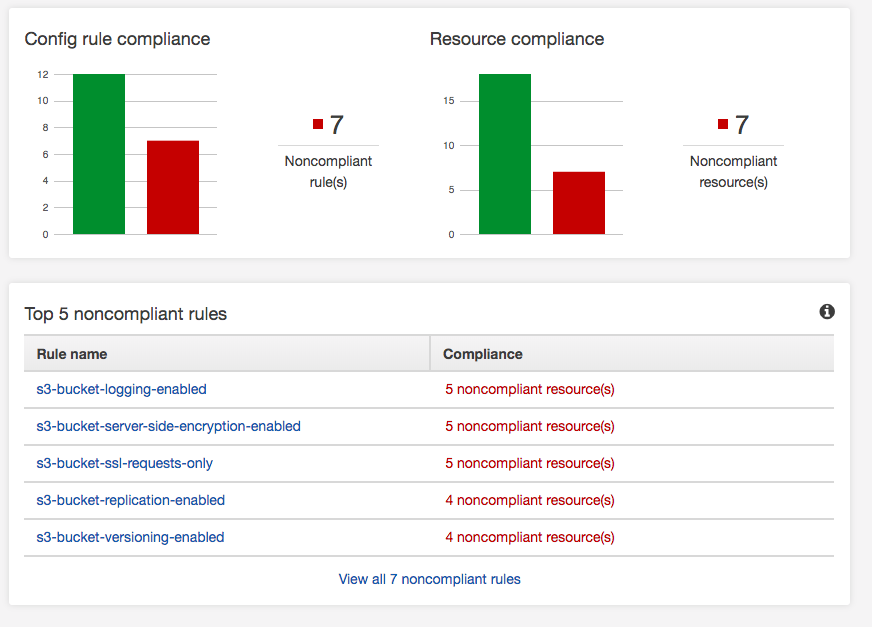

If you have an AWS deployment, make sure you turn on AWS Config. It has a whole bunch of built-in rules, and you can add your own to validate the security of your AWS environment as it relates to AWS services. Amazon provides good documentation, a

Amazon crashing on Prime Day made breaking news. It appears the company is having issues with the traffic load.

Given that Amazon has run on AWS since 2011, this is not a great sign for either Amazon or the scalability model they deployed on AWS.

Why do agencies post minimum security standards? The UK government recently released a minimum security standards document which all departments must meet or exceed. The document is available here: Minimum Cyber Security Standard.

The document is short and clear. It contains relevant items for decent security, covering the most common practices of the last 10 years. I’m not a UK citizen, but if agencies are protecting my data, why do they only have to meet minimum standards? If an insurer were using minimum standards, they would be the “lowest acceptable criteria that a risk must meet in order to be insured”. Do I really want my data and privacy held to the lowest acceptable criteria?

Now that you know government agencies apply minimum standards, let’s look at breach data. Breaches are becoming more common and more expensive, which is confirmed by a report from the Ponemon Institute commissioned by IBM. The report states that a breach will cost $3.86 million, and the kicker is that there is a recurrence 27.8% of the time.

There are two other figures in this report that astound me:

That means that after a company is breached, it takes on average 6 months to identify the breach and 2 months to contain it. The report goes on to say that 27% of the time a breach is due to human error and 25% of the time because of a system glitch.

So extrapolate from this: a person or a system makes a mistake, and it takes 6 months to identify and 2 months to contain. Those numbers should scare every CISO, CIO, CTO, and security architect, as the biggest security threats are the people and systems working for the company.

Maybe it’s time to move away from minimum standards and start forcing agencies and companies to adhere to a set of best practices for data security?

Let’s start with some basics of software development. No matter which software development lifecycle methodology is followed, it still seems to include some level of definition, development, QA, UAT, and production release. Somewhere in the process, multiple items are merged into a release. This means your release to production can still be monolithic.

The big players like Google, Facebook, and Netflix (click any of them to see their development process) have revolutionized the concept of Continuous Integration (CI) and Continuous Deployment (CD).

I want to question the future of CI/CD. Instead of consolidating a release, why not release a single item into production, validate it over a defined period of time, and then push the next release? This entire process would happen automatically based on a first-in, first-out (FIFO) queue.

Take this to the world of corporate IT and SaaS platforms. I’m thinking of software like Salesforce Commerce Cloud or Oracle’s NetSuite. I would want the SaaS platform to provide this FIFO system to load my code updates. The system would push the update over the existing code while continuing to handle requests, so users wouldn’t see discrepancies. Some validation would happen, the code would activate, and a timer would start for the next release. If validation failed, the code could be rolled back automatically or manually.
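To make the FIFO idea concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `Release` and `ReleaseQueue` names, the release names, and the `validate` callback, which stands in for whatever health checks a platform would run during the validation window. It is not how any SaaS vendor does it, only an illustration of one release going live at a time with automatic rollback.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, List


@dataclass
class Release:
    """One small change waiting to go to production (hypothetical type)."""
    name: str
    validate: Callable[[], bool]  # stand-in for health checks during the validation window


@dataclass
class ReleaseQueue:
    """Pushes queued releases one at a time, first in, first out."""
    pending: Deque[Release] = field(default_factory=deque)
    live: List[str] = field(default_factory=list)         # releases active in production
    rolled_back: List[str] = field(default_factory=list)  # releases that failed validation

    def submit(self, release: Release) -> None:
        self.pending.append(release)

    def process_next(self) -> bool:
        """Deploy the oldest pending release; roll it back if validation fails."""
        if not self.pending:
            return False
        release = self.pending.popleft()
        self.live.append(release.name)        # activate the new code
        if not release.validate():            # in practice: wait out the timer, check metrics
            self.live.remove(release.name)    # automatic rollback
            self.rolled_back.append(release.name)
        return True

    def drain(self) -> None:
        """Process every pending release in order."""
        while self.process_next():
            pass


queue = ReleaseQueue()
queue.submit(Release("fix-checkout-tax", validate=lambda: True))
queue.submit(Release("new-search-ranking", validate=lambda: False))
queue.submit(Release("update-footer", validate=lambda: True))
queue.drain()
print(queue.live)         # ['fix-checkout-tax', 'update-footer']
print(queue.rolled_back)  # ['new-search-ranking']
```

Because each release is validated alone, a failure points at exactly one change, which is the main argument for not merging items into a bigger release.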

Could this be a reality?

IPv6 implemented Anycast for many benefits. The premise behind Anycast is that multiple nodes can share the same address, and the network routes traffic to the nearest interface holding that address, as measured by the routing protocol.

There is a lot of information on it as it relates to IPv6. Start with a deep dive into RFC 4291 - IP Version 6 Addressing Architecture. Cisco also covers it in its IPv6 Configuration Guide.

The more interesting item, which was a technical interview topic this week, is the extension into IPv4. The basic premise is that the same IP prefix can be announced via BGP from multiple geographic regions, and because of how internet routing works, traffic to that address is routed to the closest node based on BGP path.

However, this presents two issues. First, if a path in BGP disappears, traffic ends up at another node, which creates state issues. Second, BGP routes based on AS path length, so depending on how the upstream ISP is peered and routed, a physically closer node might not be on the preferred path, which adds latency.
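Both issues show up in a small Python sketch. It is heavily simplified: real BGP best-path selection weighs several attributes before path length, and the site names and AS paths below are made up, using AS numbers from the range reserved for documentation. The only rule modelled here is "shortest AS path wins".

```python
from typing import Dict, List, Optional

# Hypothetical view from one client's ISP: every anycast site announces the
# same prefix, and the ISP sees a different AS path to each of them.
# ASNs come from the documentation range 64496-64511.
routes: Dict[str, List[int]] = {
    "new-york": [64500, 64501, 64510],        # physically closest, three AS hops
    "chicago": [64502, 64510],                # farther away, only two AS hops
    "london": [64503, 64504, 64505, 64510],
}


def best_site(routes: Dict[str, List[int]]) -> Optional[str]:
    """Pick the site with the shortest AS path (ties broken by name)."""
    if not routes:
        return None
    return min(routes, key=lambda site: (len(routes[site]), site))


# Latency issue: the shorter AS path wins even though New York is closer.
print(best_site(routes))  # chicago

# State issue: the Chicago announcement is withdrawn, so traffic moves to a
# node that holds none of the connection state Chicago had.
del routes["chicago"]
print(best_site(routes))  # new-york
```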

One use of this is DDoS mitigation, which is how Anycast is deployed for the root name servers and by CDN providers. Several RFCs discuss Anycast as a possible DDoS mitigation technique:

RFC 7094 - Architectural Considerations of IP Anycast

RFC 4786 - Operation of Anycast Services

Cloudflare (a CDN provider) discusses their Anycast solution: What is Anycast.

Finally, I’m a big advocate of conference papers, maybe because of my master’s degree, or because 20 years ago if you wanted to learn something it was from either a book or post-conference proceedings. While researching this blog article, I came across a well-written research paper from 2015 on the topic: Characterizing IPv4 Anycast Adoption and Deployment. It’s definitely worth a read, especially on how Anycast has been deployed to protect the root DNS servers and CDNs.

I see a lot of parallels between Containers in 2018 and the server virtualization movement that started in 2001 with VMware. So I took a trip down memory lane. My history started in 2003/2004, when I was leveraging virtualization for datacenter and server consolidation. At IBM we were pushing it to IT leadership as a way to consolidate unused server capacity, especially in test and development environments. The delivery primarily focused on VMware GSX and local storage initially. I recall that the release of vMotion and additional storage virtualization tools led to a desire to move from local storage to SAN-based storage. That allowed us to discuss the reduction of downtime and the potential for production deployments. I also remember there was much buzz when EMC acquired VMware in 2004, and it made sense given the push into storage virtualization.

Back then it was the promise of reduced cost, smaller data center footprint, improved development environments, and better resource utilization. Sounds like the promises of Cloud and Containers today.

Serverless is becoming the 2018 technology hype. I remember when containers were gaining traction in 2012, and Docker in 2013. At technology conventions, all the cool developers were using containers. It solved a lot of challenges, but it was not a silver bullet. (But that’s a blog article for another day.)

Today after an interview I was asking myself, have Containers lived up to the hype? They are great for CI/CD, getting rid of system administrator bottlenecks, helping with rapid deployment, and some would argue fundamental to DevOps. So I started researching the hype. People over at Cloud Foundry published a container report in 2017 and 2016.

Per the 2016 report, “our survey, a majority of companies (53%) had either deployed (22%) or were in the process of evaluating (31%) containers.”

Per the 2017 report, “increase of 14 points among users and evaluators for a total of 67 percent using (25%) or evaluating (42%).”

As a former technology VP/director/manager, I was always evaluating technology which had some potential to save costs, improve processes, speed development, and improve production deployments. But a 25% adoption rate and a 3-point uptick over the previous year is not moving the technology needle.

However, I am starting to see the same trend again. Serverless is the new exciting technology which is going to solve the development challenges, save costs, and improve the development process, and you are cool if you’re using it. But is it really serverless, or just a simpler way to use a container?

AWS Lambda is basically a container. (Another blog article will dig into the underpinnings of Lambda.) Where does the container run? **A server.**

It just means I don’t have to understand the underlying container, server, etc. So is it truly serverless? Or is it just the 2018 technology hype to get all us development geeks excited, because we don’t need to learn Docker or Kubernetes, or ask our sysadmin friends to provision us another server?
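For anyone who hasn't written one, this is roughly all the code a developer supplies for a Python Lambda function: a handler that takes an `event` and a `context`. The greeting logic and the `statusCode`/`body` response shape are only an illustration (that shape is what an API Gateway proxy integration would expect, which is an assumption here). Notice that nothing in it mentions a server or a container.

```python
import json


def handler(event, context):
    """Entry point the platform invokes; no server or container code appears here."""
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}"}),
    }


# Locally it is just a function call; in Lambda the service supplies event and context.
print(handler({"name": "reader"}, None))
```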

Let me know your thoughts.

Got my CCNA certificate today via email. It’s a far cry from the days of getting a beautiful package in the mail. The best part is how Cisco lets you recertify after a long hiatus.

I think Certification logos are interesting, I would not include them in emails, but some do. Also, I would probably not include certifications in an email signature anymore.

Here are the ones I’ve collected in the last few weeks. I think moving forward, I’ll update the site header to...

This morning I sat and passed Cisco 200-310 Designing for Cisco Internetwork Solutions. The exam is not easy; it required an 860 to pass. 17 years ago when I took it, it only required a 755. I got an 844 back then. This time I got an 884. It’s a tough exam, as it requires deep and broad networking knowledge across all domains (routing, switching, unified communications, and WLANs) and how to use them in network designs.

That exam officially gives me a CCDA. That officially makes 7 certifications (5 AWS and 2 Cisco) in 5 weeks.

Next up is the Cisco Exam for 300-101 ROUTE.

Had a strong technical interview today. The interviewer asked questions about the topics in this outline.

- Virtualization and Hypervisors
- Security
- Docker and Kubernetes
- OpenStack/CloudStack - where I lack experience
- Chef
  - Security of data - see Databags
- CloudFormation - immutable infrastructure
- DNS
- Bind
- Route53
- Anycast
  - Resolvers route to different servers
- Cloud
- Azure
- Moving Monolithic application to AWS
- Explain the underpinnings of IaaS, PaaS, SaaS.

These were all great questions for a technical interview. The interviewer was easy to converse with, and it was much more of a great discussion than an interview. The breadth and depth of the interview questions were impressive, and so were the interviewer’s answers to my questions. I left the interview hoping that the interviewer would become a colleague.

I passed the 200-125 CCNA exam today. I actually scored higher than I did 17 years ago. However, the old CCNA covered much more material. Technically, per Cisco guidelines, it’s 3 - 5 days before I become officially certified.

Primarily I used VIRL to get the necessary hands-on experience, along with the Cisco Press CCNA study guide. Wendell Odom always does a good job, and his blog is beneficial in studying. The practice tests from MeasureUp are OK, but I wouldn’t get them again.

Next up: the 200-310 DESGN.

The last time I started studying for Cisco certifications, I built a six-router, one-switch lab on my desk. One router had console ports for all the other routers, and the management port was connected to my home network so I could telnet into each of the routers via their console ports. It was exciting and a great way to learn and simulate complex configurations. The routers had just enough power to run BGP and IPSec tunnels.

This time, I found VIRL, which is interesting as you build a network inside an Eclipse environment. On the backend, the simulator creates a network of multiple VMs.

So far, I have built a simple switch network. I’m using it with the cloud service Packet, as the memory and CPU requirements exceed my laptop. Packet provides a bare-metal server, which is required for how VIRL does a PXE boot. I wish there was a bare-metal option on AWS.

I’m still trying to figure out how to upload complex configurations and troubleshoot them.

The product is very interesting as it provides a learning environment for a few hundred dollars vs. the couple thousand which I spent last time to build my lab.

I had a goal 4 weeks ago, to pass 5 AWS certifications in 4 weeks. I completed this goal:

- AWS Certified Solutions Architect – Associate
- AWS Certified Developer – Associate
- AWS Certified SysOps Administrator – Associate
- AWS Certified Advanced Networking – Specialty
- AWS Certified Solutions Architect – Professional

For the time being, I’m going to be done with AWS certifications, unless I get a position which leverages AWS. This week I made a list of the certifications that I will look at over the coming year, with a goal to complete all of them by August of 2019. I still have a fall semester to finish, so I’ll stop certifications from the end of August until December to focus on finishing my master’s.

The list of certifications I made:

- Azure
- GCP
- CISSP
- CISM
- Cisco
- TOGAF
- ITIL
- Linux Certification

Anyone know of any other ones to pursue? Think it’s a good list for a Solution Architect as it has a broad range of cloud technologies, networking, and security.

I have decided that my next challenge will be 2 Cisco certifications in the next 2 weeks. After that, we’ll see what is next on the list.

I sat the AWS Certified Solutions Architect - Professional exam this morning. This exam is hard, probably the hardest of the AWS exams I have taken to date. I did it in about half the allowed time. Generally, the test is challenging as it covers a lot of topics and each question always had two correct choices. The entire exam is a challenge to pick the more correct answer based on the scenario and question, with a driving factor of one or more of the following: scalability, cost, recovery time, performance, or security.

I felt like I passed the exam while doing it, but it’s always a relief to see:

Congratulations! You have successfully completed the AWS Certified Solutions Architect - Professional exam and you are now AWS Certified.

Here is my score breakdown from the exam.

Topic Level Scoring:

| Section | Topic | Score |
| --- | --- | --- |
| 1.0 | High Availability and Business Continuity | 81% |
| 2.0 | Costing | 75% |
| 3.0 | Deployment Management | 85% |
| 4.0 | Network Design | 85% |
| 5.0 | Data Storage | 81% |
| 6.0 | Security | 85% |
| 7.0 | Scalability & Elasticity | 63% |
| 8.0 | Cloud Migration & Hybrid Architecture | 57% |

The best part of interviewing is when you spend a day with people who are skilled and interested, and have great discussions. I spent Friday in 5 one-hour interviews, which were great. The people genuinely liked the company and their contributions to it, and were looking to add talented people to their team. I felt like I fit in, and that it would be a great place to work.

You never know what will happen, but I’m looking forward to the next steps.

I sat the AWS Certified SysOps Administrator - Associate this morning. That makes two exams this week in 3 days.

The exam was a little bit harder than the two other Associate exams as it went a level deeper. It focused on CloudFormation, CloudWatch, and deployment strategies. There were nine questions where I struggled to pick the right answer, as all nine had two good answers. There were about 35 questions I knew cold. Three questions were duplicates from the other Associate exams. I was overthinking all of the network questions, probably because of the networking exam earlier this week. Given this, I wasn’t worried when I ended the test. However, it’s always a relief when you get the “Congratulations! You have successfully completed the AWS Certified SysOps Administrator - Associate.”

Within 10 minutes I got my score email:

Congratulations again on your achievement!

Overall Score: 84%

Topic Level Scoring:

| Section | Topic | Score |
| --- | --- | --- |
| 1.0 | Monitoring and Metrics | 80% |
| 2.0 | High Availability | 83% |
| 3.0 | Analysis | 100% |
| 4.0 | Deployment and Provisioning | 100% |
| 5.0 | Data Management | 83% |
| 6.0 | Security | 100% |
| 7.0 | Networking | 42% |

The score reflects overthinking the networking questions. I wouldn’t recommend sitting two different exams in the same few days.

That makes 4 AWS certifications in 3 weeks:

- AWS Certified SysOps Administrator - Associate

- AWS Certified Advanced Networking - Specialty

- AWS Certified Developer - Associate

- AWS Certified Solutions Architect - Associate (Released February 2018)

Guess now it’s time to focus on the last of the Amazon Certifications I’ll work on for now which is the AWS Certified Solutions Architect – Professional.

Is DevOps the most overused word in technology right now?

The full definition is on Wikipedia. Here is what DevOps is really about: taking monolithic code with complex infrastructure supported by developers, operations personnel, testers, and system administrators, then simplifying it, monitoring it, and taking automated corrective actions or sending notifications.

It’s really about reducing resources who aren’t helping the business grow and using that headcount toward a position which can help revenue growth.

It’s done in 3 pieces.

Piece 1. The Infrastructure

It starts by simplifying the infrastructure build-out, whether it is in the cloud, where environments can be spun up and down instantly based on a known configuration like AWS CloudFormation, or using Docker or Kubernetes. More recently, there is Function as a Service (FaaS): AWS Lambda, Google Cloud Functions, or Azure Functions. This reduces reliance on DBAs, Unix or Windows system administrators, and network engineers. Now the developer has the space they need instantly. The developer can deploy their code quicker, which speeds time to market.

Piece 2. Re-use and Buy vs. Build

Piece 2 is re-use and buy vs. build, meaning that if someone already has a service, re-use it; don’t go building your own. Examples are Auth0 for authentication and Google Maps for mapping locations or directions.

Piece 3. When building or creating software do it as Microservices.

To simplify it, you implement microservices. Basically, you create code that does one thing well. It’s small, efficient, and manageable. It outputs JSON which can be parsed by upstream services. The JSON can be extended without causing issues for upstream services. This reduces the size of the code base a developer is touching, as it is one service. It also reduces the regression testing footprint, so the number of testers, unit tests, regression tests, and integration tests shrinks. This means faster releases to production, and also a reduction in resources.
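The point about extending the JSON safely depends on consumers reading only the fields they need. A minimal Python sketch, with a made-up pricing service and field names, shows a consumer that keeps working when the service adds a field:

```python
import json


def price_service_v1() -> str:
    """Hypothetical microservice: does one thing and returns JSON."""
    return json.dumps({"sku": "A-100", "price": 19.99})


def price_service_v2() -> str:
    """Same service after a release that adds a field."""
    return json.dumps({"sku": "A-100", "price": 19.99, "currency": "USD"})


def read_price(payload: str) -> float:
    """Consumer that reads only the field it needs and ignores the rest."""
    data = json.loads(payload)
    return data["price"]


# The consumer is unchanged, yet works against both versions of the service.
print(read_price(price_service_v1()))  # 19.99
print(read_price(price_service_v2()))  # 19.99
```

Removing or renaming a field is a different story: that would break the consumer, so it still needs coordination.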

You’re not doing DevOps if any of these conditions apply:

- You have monolithic software you’ve put some web services in front of.
- Developers are still asking to provision environments to work.
- People are still doing capacity planning and analysis.
- NewRelic (or any other system) is monitoring the environment, but no one is aware of what is happening.
- Production pushes happen at most once a month because of the effort and amount of things which break.

Doing DevOps

- Take the monolithic software and break it into web services.
- Developers can provision environments per a Service Catalog as required.
- Automate capacity analysis.
- Automatic SLAs which trigger notifications and tickets.
- NewRelic is monitoring the environment, and it is providing data to systems which self-correct issues, and there are feedback loops on releases.
- Consistently (multiple times a week) pushing to production to enhance the customer experience.
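As a rough sketch of the "automatic SLAs" and "self-correcting" items, the Python below checks a window of metric samples against a threshold and returns the actions to take. The metric name, the threshold, and the action strings are all hypothetical; a real setup would call the monitoring, scaling, and ticketing systems instead of returning text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sla:
    """Hypothetical SLA: a metric name and the worst acceptable value."""
    metric: str
    max_value: float


def evaluate(sla: Sla, samples: List[float]) -> List[str]:
    """Return the actions to take for a window of metric samples."""
    if not samples:
        return ["notify: no data for " + sla.metric]
    average = sum(samples) / len(samples)
    if average <= sla.max_value:
        return []
    # Breach: try a self-correcting action first, then leave a paper trail.
    return [
        "scale_out: add capacity",
        f"ticket: {sla.metric} averaged {average:.0f}, limit {sla.max_value:.0f}",
    ]


latency = Sla(metric="p95_latency_ms", max_value=300)
print(evaluate(latency, [210, 250, 240]))  # []
print(evaluate(latency, [280, 420, 500]))
```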

The material for the AWS Certified SysOps Administrator – Associate seems to be a lot of the material covered under the Associate Architect and Associate Developer. I would have thought the material would focus more on setting up and troubleshooting issues with EC2, RDS, ELB, VPC, etc. It also spends a lot of time looking at CloudWatch, but doesn’t really provide strategies for leveraging the logs. Studying was a combination of the acloud.guru course, the official study guide, and the Amazon whitepapers.

I took the AWS-supplied practice test using a free test voucher and scored the following:

Congratulations! You have successfully completed the AWS Certified SysOps Administrator Associate - Practice Exam

Overall Score: 90%

Topic Level Scoring:

1.0 Monitoring and Metrics: 100%

2.0 High Availability: 100%

3.0 Analysis: 66%

4.0 Deployment and Provisioning: 100%

5.0 Data Management: 100%

6.0 Security: 100%

7.0 Networking: 66%

It’s interesting that the networking score was so low, as I just passed the Networking Specialty.

This is the last Associate exam for me to pass. If I pass it, I will begin studying for the Certified Solutions Architect - Professional. That will probably be my last AWS certification, as I’ll look at starting on something like TOGAF, Red Hat or Linux Institute, Cisco, GCP, or Azure, depending on where my interest lies in a few weeks.

I passed the AWS Certified Advanced Networking – Specialty Exam this morning. The exam is hard. My career started in networking, as I had multiple Nortel and Cisco certifications and was studying for the CCIE Lab back then. But over the last 12 years, I got away from networking. Doing this exam was going back to something I loved for a long time, as BGP, networking, load balancers, and WAFs excite me.

My exam results

Topic Level Scoring:

1.0 Design and implement hybrid IT network architectures at scale: 75%

2.0 Design and implement AWS networks: 57%

3.0 Automate AWS tasks: 100%

4.0 Configure network integration with application services: 85%

5.0 Design and implement for security and compliance: 83%

6.0 Manage, optimize, and troubleshoot the network: 57%

I had limited experience with AWS networking prior to this exam. I had the standard things like load balancers, VPCs, Elastic IPs, and Route 53. This exam tests your knowledge of these areas and more. To prepare I used the acloud.guru course, the book AWS Certified Advanced Networking Official Study Guide: Specialty Exam, and the Udemy Practice Tests. With the course and book, I set up VPC peers, endpoints, NAT instances, gateways, and CloudFront distributions. I put about 50 hours into doing the course, reading the book, doing various exercises, and studying.

Based on my experience the acloud.guru course is lacking the details on the ELBs, the WAF, private DNS, and implementation within CloudFormation. The book comes closer to the exam, but also doesn’t cover CloudFormation, WAF or ELBs as deep as the exam. The Udemy practice tests were close to the exam, but lack some of the more complex scenario questions.

I plan to sit the AWS Certified SysOps Administrator - Associate exam later this week.

What’s up with interviewers asking about Kubernetes experience lately? Two different interviewers raised the question today.

Kubernetes is only 4 years old. GCP has supported it for a while. AWS released it in beta at re:Invent 2017, and it went to general release on June 5, 2018. Azure went GA June 13, 2018.

So how widely deployed is it? Also if it is supposed to speed deployments, how complex can it be? How many hours to learn it?

Next week I will be learning it. Looking forward to answering these questions.

The PDF provided this:

The AWS Certified Solutions Architect - Associate (Released February 2018) (SAA-C01) has a scaled score between 100 and 1,000. The scaled score needed to pass the exam is 720.

I got a 932….

awsarch went SSL.

Amazon offers free SSL certificates if your domain is hosted on an ELB, CloudFront, Elastic Beanstalk, API Gateway or AWS CloudFormation.

More information is available on the AWS Certificate Manager (ACM) service page.

So basically it required setting up an Application Load Balancer, updating DNS, making updates to .htaccess and a fix to the wp-config file.
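The post doesn't show the actual changes, so the following is only an assumption about why both files needed touching. When TLS terminates at the load balancer, the web server behind it sees plain HTTP, and the original scheme arrives in the `X-Forwarded-Proto` header. A redirect rule has to check that header, or it loops forever. The Python below sketches that decision logic only; the function name and example host are hypothetical, and the real fix would live in Apache and WordPress configuration.

```python
from typing import Mapping, Optional


def redirect_target(headers: Mapping[str, str], host: str, path: str) -> Optional[str]:
    """Return the HTTPS URL to redirect to, or None if the request was already HTTPS.

    Behind a load balancer that terminates TLS, the web server only ever sees
    plain HTTP, so the original scheme has to be read from X-Forwarded-Proto.
    """
    scheme = headers.get("X-Forwarded-Proto", "http").lower()
    if scheme == "https":
        return None
    return f"https://{host}{path}"


print(redirect_target({"X-Forwarded-Proto": "http"}, "example.com", "/blog"))
# https://example.com/blog
print(redirect_target({"X-Forwarded-Proto": "https"}, "example.com", "/blog"))
# None
```

For simplicity the sketch looks the header up with exact casing in a plain mapping; real HTTP header names are case-insensitive.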

Now the site is HTTPS and the weird non-HTTPS browser messages went away. Come July, Chrome will start showing a warning sign on non-HTTPS sites, per this Verge article.

Free SSL certificates can also be acquired here https://letsencrypt.org/

I sat the exam for the AWS Certified Developer - Associate this morning. I felt lucky as the system kept asking questions I knew in depth. There were only 4 questions I didn’t know the answer to and took an educated guess.

I did the exam in 20 minutes for 55 questions. I only review questions I flag, and I only flagged about 8 questions. I felt really lucky as the exam was playing to my knowledge of DynamoDB, S3, EC2, and IAM. There were other questions about Lambda, CloudFormation, CloudFront, and API calls. But the majority of the questions focused on 4 areas of AWS that I knew really well.

At the end of the exam, I got the “Congratulations! You have successfully completed the AWS Certified Developer – Associate exam.”

Also within 15 minutes, I got the email confirming my score:

Congratulations again on your achievement!

Overall Score: 90%

| Section | Topic | Score |
| --- | --- | --- |
| 1.0 | AWS Fundamentals | 100% |
| 2.0 | Designing and Developing | 85% |
| 3.0 | Deployment and Security | 87% |
| 4.0 | Debugging | 100% |

I’m still waiting on my score from my Solutions Architect - Associate exam. In the meantime, I’ll get back to studying for my AWS Networking Specialty.

AWS offers practice exams through PSI. One costs $20 and gives you 20 questions for practice. I did the exam today. Here is the results email.

Congratulations! You have successfully completed the AWS Certified Developer Associate - Practice Exam

Overall Score: 95%

Topic Level Scoring:

1.0 AWS Fundamentals: 100%

2.0 Designing and Developing: 87%

3.0 Deployment and Security: 100%

4.0 Debugging: 100%

That’s a confidence builder going into the exam tomorrow morning.

I had scheduled the AWS Certified Developer – Associate test for June 14, so I needed to stop studying the networking material and finish studying for the developer exam. I completed the https://acloud.guru/ course on Sunday and decided to purchase AWS Certified Developer - Associate Guide: Your one-stop solution to passing the AWS developer’s certification.

The book was good. It covers all the major topics for the associate developer certification, but it lacks hands-on labs, and there are several errors in the mock exams.

The number one problem with interviewers, recruiters, etc. is the lack of follow-up. I refer to it as ghosting. At least have the courtesy to reach out, even via email, and say, thank you, but you’re not a fit.

Why do people ask whether you are OK with a manager title? If you were a VP or director and are now applying for a manager position, do they really think you can’t read the job title? I took the time to apply for this position, research the company, and prep for the phone call, and the first question you ask me is whether I understand this is a manager position. Argh! Assume that if I applied for the position, something about it interests me, and ask me why I want this position.

This has happened a few times recently:

- _Have you ever been in an interview and, halfway through, found yourself thinking, does this person want to report to me or be the manager instead?_ Decide this before you start interviewing people. It’s a waste of time.

- _Have you ever been in an interview and, halfway through, found yourself thinking there is no way I want to manage this person?_ Why would a company have this person interview you? Are they trying to scare you away?

Best Technical Interview questions so far…

- _What is callback hell in JavaScript?_ First, I don’t know why you’d even need to know what this is if you’re a good programmer.

- _What is the difference between inheritance in Python and Java?_ Python natively supports multiple inheritance, whereas in Java a class can extend only one class: Class B would extend Class A, and Class C could extend Class B.

- _How does a bash shell work?_

- _When to use swap and when not to use swap?_ Ugh, the answer is it depends on the application.
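To make the inheritance question concrete, here is a minimal Python sketch. The class names (`Router`, `Firewall`, `SecureRouter`, `A`, `B`, `C`) and the helper `mro_names` are made up for illustration and were not part of the interview. It shows a class inheriting from two base classes at once, next to the single-parent chain that is the only option for classes in Java.

```python
# Hypothetical classes, for illustration only.
class Router:
    def describe(self):
        return "routes packets"


class Firewall:
    def describe(self):
        return "filters packets"


# Python: one class can inherit from several base classes at once.
class SecureRouter(Router, Firewall):
    pass


# The chain a Java developer would write: A <- B <- C, one parent each.
class A:
    def name(self):
        return "A"


class B(A):
    pass


class C(B):
    pass


def mro_names(cls):
    """Return the class names in method resolution order (MRO)."""
    return [klass.__name__ for klass in cls.__mro__]


if __name__ == "__main__":
    # With two parents defining describe(), the MRO decides which one wins.
    print(mro_names(SecureRouter))
    print(SecureRouter().describe())
    # The linear chain resolves in the order it was extended.
    print(mro_names(C))
```

The method resolution order is what settles conflicts when both parents define the same method: Python searches the bases left to right, so `Router.describe` is used here.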

Still waiting on the score from the AWS Certified Solutions Architect – Associate exam.

However, I have also started studying for the AWS Certified Advanced Networking - Specialty.

I love networks and networking, especially VPNs and BGP. So I felt it was a good challenge as well as something I enjoyed doing. 12 years ago, I had multiple Cisco routers on my desk and would run BGP, OSPF, and EIGRP configurations. Maybe I need an AWS Direct Connect…

I sat the AWS Certified Solutions Architect - Associate exam. It was challenging as it covers a broad set of AWS services. I sat the February 2018 version, which is the new one.

At the end of the exam, I got a “Congratulations! You have successfully completed the AWS Certified Solutions Architect - Associate exam” message.

I decided that I would complete the AWS Certified Developer - Associate next.

AWS offers practice exams through PSI. Each one costs $20 and gives you 20 practice questions. I took the practice exam today. Here is the results email.

Thank you for taking the AWS Certified Solutions Architect - Associate - Practice (Released February 2018) exam. Please examine the following information to determine which topics may require additional preparation.

Overall Score: 80%

Topic Level Scoring:

1.0 Design Resilient Architectures: 100%

2.0 Define Performant Architectures: 71%

3.0 Specify Secure Applications and Architectures: 66%

4.0 Design Cost-Optimized Architectures: 50%

5.0 Define Operationally-Excellent Architectures: 100%

I was a little concerned after the practice exam. I spent the rest of the evening studying. There are various blogs which talk about the exam, but it seems that, depending on the day, the exam, and the location, you could need anywhere from 65% to 72% to pass. Based on the practice exam, I didn’t have a lot of room for error.

I spent 24 hours feeling sorry for myself. I had already started studying for the AWS Certified Solutions Architect - Associate as I wanted to be prepared if I got an offer from Amazon. Was there now no reason to continue preparing for the exam?

What was I going to do? I decided to continue studying for the exam and credential myself, possibly with the end goal of either going back to work for Amazon or working somewhere else. Certification couldn’t hurt me.

It had been over a decade since I was last certified. At one point, I had multiple networking certifications from Nortel, plus my CCNA, CCDA, and CCNP, and was studying for a CCIE. The process is always hard, but I love learning. So I decided to push forward with my AWS certification.

Completed the Amazon interview on site. It’s a meeting with 5 Amazonians. The interviews are between 30 minutes and 45 minutes. They start promptly on time and end promptly on time. Each question is about telling a story and relating back to the Amazon Leadership Principles.

There were a lot of great questions during the session. It requires you to be detailed, communicate clearly and explain your answers.

There were three questions which stood out:

- What Leadership Principle do you associate the most with?

- What Leadership Principle do you associate the least with or disagree with?

- What was a decision you made wrong and why?

The other notable thing I noticed is that all the interviewers loved their jobs and loved the culture of Amazon. Also, with the right amount of leaning in, any idea could take shape and become part of AWS.

The other thing I noticed is that Amazon has a long lead process for developing Solutions Architects. They want a person to know AWS before speaking with customers, which could take 6 to 9 months and require multiple AWS certifications. Also, if you want to speak on behalf of Amazon, you have to get public speaking certified within Amazon.

It’s clear they want the smartest people with the most AWS knowledge.

Amazon requires a writing sample of 2 pages about a topic they provide. To do this, I wrote 3 paragraphs on each of 6 different key events from my career. Then I took 3 of those topics and expanded each to a page. Finally, I decided which topic covered the most Amazon Leadership Principles and used that one for the final essay, elaborating and extending it to two full pages.

The writing sample has been submitted. Let’s see how it goes.

This is my blog about my experience finding a new position.

This started in May with the beginning of an interview process with Amazon.

I called it AWSARCH just because it’s a truly obscure name.

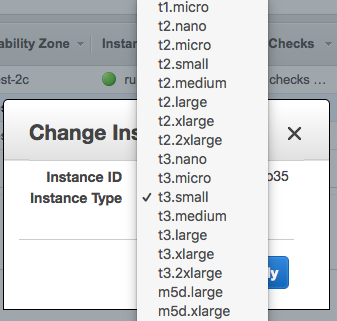

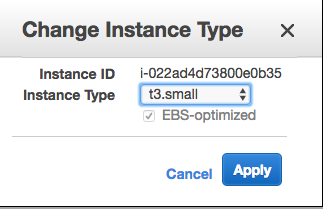

Change Instance

T3 EBS Optimized

However, one of my findings yesterday was EBS volumes that were missing encryption. Encrypting EBS volumes takes 9 easy steps:
